Can AI be Fair?

Introduction

You might expect machines to be free from human prejudice. Unless, that is, you’ve heard of algorithmic bias — the tendency of artificial intelligence (AI) to replicate human biases, leading to unfairness and discrimination.

A notorious algorithm used in the US criminal justice system to predict risk of re-offending was “biased against blacks”, news outlet ProPublica uncovered. An MIT study of commercial face-recognition software revealed significant gender and skin-type bias. Meanwhile, an HR tool piloted by Amazon to screen job applicants’ resumes was decommissioned after it was shown to penalize women.

Biased algorithms don’t just mirror human biases; they also entrench existing disadvantages in society. This was the finding of a Pew Research poll of 1,302 experts and corporate practitioners. Biased algorithms “tend to punish the poor and the oppressed”, as Cathy O’Neil, author of the popular book Weapons of Math Destruction, puts it.

Policymakers are playing catch-up with this emerging problem, which is fast becoming a top-priority legal issue on both sides of the Atlantic. Businesses need to comply with this evolving body of discrimination law, protect their customers and mitigate reputational risk. However, current technology is letting them down.

Why are algorithms biased?

Algorithmic bias stems from the biases of tech developers and society at large, but certain AI systems are more susceptible to being contaminated than others. The problem goes to the foundations of machine learning (ML). A core assumption of ML is that the training data closely resembles the test data — that the future will be very similar to the past. Given that the past is replete with racism, sexism and other kinds of discrimination, this assumption can lead ML systems to perpetuate historical injustices.

To compound this problem, powerful ML algorithms like neural networks are “black boxes”: when they do exhibit bias, it can be difficult or impossible to find out why and to debug the system.

Causal AI, a new approach to AI that can reason about cause and effect, is free from these problems and can help to de-bias algorithms. Causal AI can envision futures that are decoupled from historical data, enabling users to eliminate biases in input data. Causal models are also inherently explainable, empowering domain experts to interrogate their biases and design fairer algorithms.

Patches for machine learning

How can tech developers prevent algorithmic bias? As a toy example, take a bank deploying an ML algorithm to make mortgage loan decisions.

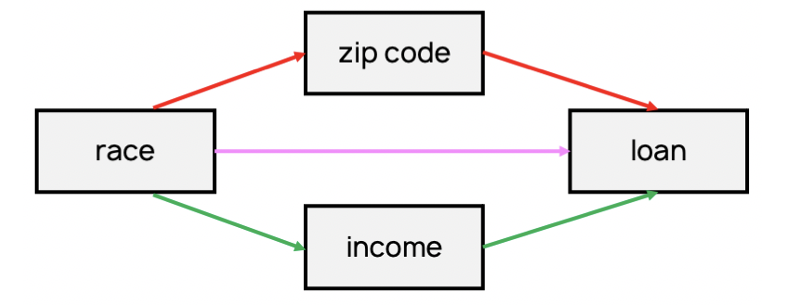

Suppose the bank has a data set with some features (zip_code and income), a “protected characteristic” (race) and a binary label (default). The bank trains a neural network to distinguish customers who will make repayments on time from those who will default.

However, given the documented history of racial discrimination in mortgage lending, there is a risk that the algorithm will project that history onto future loan applicants. Indeed, in the US, FinTech companies using standard ML algorithms and data analytics charge otherwise-equivalent Hispanic and African American borrowers significantly higher rates.

Because the neural network is a black box, it is hard to tell whether the algorithm is making loan decisions in line with the bank’s legitimate business purpose, using features like income that happen to correlate with race, or whether it is illegally “redlining” applicants via a proxy feature like zip_code.

How can the bank ensure their algorithm is fair? For that matter, what even is fairness? Researchers have proposed an explosion of “fairness criteria”, many of which fall into three broad categories:

- Fairness through unawareness: ignore race

- Parity: give applicants from different races the same chance of being granted a loan

- Calibration: make loan decisions equally reliable for each racial group, so that approved applicants repay at the same rate whatever their race
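To make the last two criteria concrete, here is a minimal Python sketch of how they might be measured. The records, the group labels "A" and "B" and the function names are all hypothetical, invented for illustration, and calibration is read here simply as "approved applicants repay at the same rate in each group". Fairness through unawareness is not shown, since it amounts to dropping the race column before training.

```python
from collections import defaultdict

# Hypothetical toy records, invented for illustration: (race, approved, repaid).
# In real data `repaid` is only observed for approved applicants; here it is
# filled in for everyone to keep the sketch simple.
DECISIONS = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", False, True), ("B", False, False),
]


def approval_rate_by_group(records):
    """Parity: share of applicants in each group who were granted a loan."""
    approved, total = defaultdict(int), defaultdict(int)
    for race, was_approved, _repaid in records:
        total[race] += 1
        approved[race] += int(was_approved)
    return {race: approved[race] / total[race] for race in total}


def repayment_rate_among_approved(records):
    """Calibration (read as equal reliability): share of approved applicants who repaid."""
    repaid, approved = defaultdict(int), defaultdict(int)
    for race, was_approved, did_repay in records:
        if was_approved:
            approved[race] += 1
            repaid[race] += int(did_repay)
    return {race: repaid[race] / approved[race] for race in approved}


parity = approval_rate_by_group(DECISIONS)
reliability = repayment_rate_among_approved(DECISIONS)
print("approval rate by group:", parity)
print("repayment rate among approved:", reliability)
```

In this invented data neither criterion holds: group A is approved more often than group B, and approved applicants in group A also repay more often.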

These fairness criteria may seem reasonable, but each has flaws. For instance, the naive “fairness through unawareness” approach of simply deleting race from the data unfortunately doesn’t work. This is because the algorithm recovers the withheld information from zip_code and income, which continue to correlate with it.
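The proxy effect is easy to reproduce on synthetic data. The sketch below assumes, purely for illustration, two groups and two made-up zip codes, with 90% of each group living in "its" zip code. A simple majority-vote lookup then recovers race from zip_code alone, even though race is never given to it as an input.

```python
import random
from collections import Counter, defaultdict

# Made-up zip codes; each group's "home" zip code is an assumption of this sketch.
HOME_ZIP = {"A": "10001", "B": "20002"}
OTHER_RACE = {"A": "B", "B": "A"}


def make_applicants(n=2000, seed=0):
    """Generate synthetic applicants whose zip code tracks race 90% of the time."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        race = rng.choice(["A", "B"])
        lives_at_home = rng.random() < 0.9
        zip_code = HOME_ZIP[race] if lives_at_home else HOME_ZIP[OTHER_RACE[race]]
        rows.append({"race": race, "zip_code": zip_code})
    return rows


def race_recovery_accuracy(rows):
    """Guess each applicant's race as the most common race in their zip code."""
    counts = defaultdict(Counter)
    for row in rows:
        counts[row["zip_code"]][row["race"]] += 1
    lookup = {z: c.most_common(1)[0][0] for z, c in counts.items()}
    # Evaluated on the same rows used to build the lookup, which is enough
    # to show that the information is still present in the data.
    hits = sum(lookup[row["zip_code"]] == row["race"] for row in rows)
    return hits / len(rows)


rows = make_applicants()
accuracy = race_recovery_accuracy(rows)
print(f"race recovered from zip_code alone: {accuracy:.0%}")
```

A neural network has far more capacity than this lookup table, so deleting the race column gives little protection when proxies of this kind remain in the data.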

Troublingly, the criteria are also incompatible with each other. For instance, when default rates differ between groups, a calibrated algorithm generally cannot also achieve parity. If the bank wants to apply these criteria to their mortgage application algorithm, they will face hard choices about how to trade the criteria off and which to prioritize. These choices can’t be made by the neural network without an understanding of business context. Moreover, the bank will face profound challenges in preventing covert discrimination.
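A small worked example shows where the tension between calibration and parity comes from. The numbers below are hypothetical: each group has 100 applicants, and the score is assumed to be perfectly calibrated, meaning that a score of 0.9 corresponds to a 90% repayment probability in both groups. The groups differ only in how many applicants fall into each score bucket.

```python
# Hypothetical calibrated scores: score -> number of applicants in that bucket.
# By assumption, the score is the true repayment probability in both groups.
GROUPS = {
    "A": {0.9: 60, 0.5: 40},
    "B": {0.9: 30, 0.5: 70},
}


def approval_rate(buckets, threshold):
    """Share of a group approved when everyone scoring at or above `threshold` gets a loan."""
    total = sum(buckets.values())
    approved = sum(n for score, n in buckets.items() if score >= threshold)
    return approved / total


def repayment_rate_of_top(buckets, n_approved):
    """Expected repayment rate among the `n_approved` highest-scoring applicants."""
    remaining = n_approved
    expected_repaid = 0.0
    for score in sorted(buckets, reverse=True):
        take = min(buckets[score], remaining)
        expected_repaid += take * score
        remaining -= take
    return expected_repaid / n_approved


# One threshold for everyone: the score means the same thing in both groups, but parity fails.
for name, buckets in GROUPS.items():
    print(name, "approval rate at threshold 0.8:", approval_rate(buckets, 0.8))

# Forcing parity (approve 60 applicants per group): approval now means different risk per group.
for name, buckets in GROUPS.items():
    print(name, "repayment rate among 60 approved:", round(repayment_rate_of_top(buckets, 60), 3))
```

With a single threshold, group A is approved twice as often as group B. Equalizing approval rates forces the bank to approve lower-scoring applicants in group B, so an approval no longer carries the same reliability across groups. Which of these outcomes is acceptable is a business and legal judgement, not something the model can decide.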

Causality-based solutions

Focussing on causes instead of correlations provides a different approach to algorithmic bias that promises to overcome the problems that conventional ML runs into. By distinguishing between correlation and causation, Causal AI can disentangle different kinds of discrimination that are conflated in ML algorithms. Moreover, these different kinds of discrimination are rendered in an easily readable and intuitive causal diagram.

More fundamentally, Causal AI is not based on the assumption that the future will be very similar to the past. The AI is equipped with powerful tools that enable it to evaluate regime changes or “interventions”, and to “imagine” how the world might have been different. These modelling tools are typically used to conduct virtual experiments and answer “why”-questions. But they can also be deployed to ascertain the impact of race on the mortgage loan system, and then eliminate that impact.

For example, Causal AI can evaluate whether a given loan decision would have changed had the applicant’s race been different — even if that combination of factors is different from anything seen before in the training data.
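A counterfactual query of this kind can be sketched with a toy structural causal model. Everything below is a simplifying assumption made for illustration rather than a description of any particular Causal AI product: race influences zip_code, income is treated as unaffected by race, and the applicant's exogenous "noise" (everything else that determines where they live) is taken as known, whereas in practice it would have to be inferred from data. This style of test is often called a counterfactual fairness check.

```python
from dataclasses import dataclass

# Hypothetical structural causal model: race -> zip_code -> decision, income -> decision.
# Assumption for this sketch: income is not itself affected by race.
MAJORITY_ZIP = {"A": "10001", "B": "20002"}
OTHER_RACE = {"A": "B", "B": "A"}


@dataclass(frozen=True)
class Applicant:
    race: str
    income: float
    u_zip: float  # exogenous noise in [0, 1): all other factors behind where the applicant lives


def zip_code(race, u_zip):
    """Structural equation: 90% of each group lives in that group's majority zip code."""
    if u_zip < 0.9:
        return MAJORITY_ZIP[race]
    return MAJORITY_ZIP[OTHER_RACE[race]]


def proxy_rule(income, zip_code_value):
    """A rule that redlines: it rewards one zip code as well as income."""
    return income >= 50_000 and zip_code_value == "10001"


def income_rule(income, zip_code_value):
    """A rule that uses income only and ignores the zip code."""
    return income >= 50_000


def decision_changes_with_race(applicant, rule):
    """Counterfactual query: same person, same noise, different race."""
    factual_zip = zip_code(applicant.race, applicant.u_zip)
    counterfactual_zip = zip_code(OTHER_RACE[applicant.race], applicant.u_zip)
    factual = rule(applicant.income, factual_zip)
    counterfactual = rule(applicant.income, counterfactual_zip)
    return factual != counterfactual


applicant = Applicant(race="B", income=60_000, u_zip=0.3)
print(decision_changes_with_race(applicant, proxy_rule))   # True
print(decision_changes_with_race(applicant, income_rule))  # False
```

The first rule fails the check, because changing the applicant's race changes their zip code and therefore the decision. The second rule passes, but only under the assumption that income does not depend on race; a domain expert would need to decide whether that arrow belongs in the causal diagram.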

While causality provides some of the most promising new approaches to reducing algorithmic bias, it should be noted that there is not a one-size-fits-all solution to the problem. As a recent UK government report highlights, “the ways in which algorithmic bias is likely to be expressed are highly context-specific”. Companies don’t need a grand unified theory of fairness, so much as they need domain experts who understand what fairness amounts to in the context of their business and market.

Perhaps the greatest advantage of causal approaches to fairness is that, unlike standard machine learning algorithms, causal models are highly explainable and transparent. The technology empowers domain experts to interact directly with causal models to ensure that the system is, by their reckoning, fair and unbiased. Experts can understand and engage with causal models before they have been trained on data, ensuring that the AI doesn’t learn to unfairly discriminate in production. Moreover, Causal AI enables the bank to give applicants an explanation as to why their loan has been denied, something that they deserve and that is enshrined in EU law as a “right to explanation”.

We are only beginning to understand and grapple with problems of social justice, and we can’t expect machines to solve them outright. However, while some algorithms exacerbate societal ills, Causal AI can be a key ally in understanding and addressing them.