Develop a Causal Model with Our Decision Trees

Bring causality to your tree-based models.

Tree-based models are among the most popular algorithms today, and with good reason. They handle some of the most complex data science use cases, are easy to understand and interpret, and accommodate both categorical and numerical data.

decisionOS extends tree-based models with the causaLens decision tree (CLDT), allowing you to deliver more business value:

- Causal regularization: Your CLDT respects the causal relationships in your use case, preventing overfitting.

- Linear leaf models: The leaves of your tree can be fit with linear models, improving performance and enabling explainability.

- Support for boosting and bagging: Use CLDTs without compromise, with full support for advanced methods such as boosting and bagging.

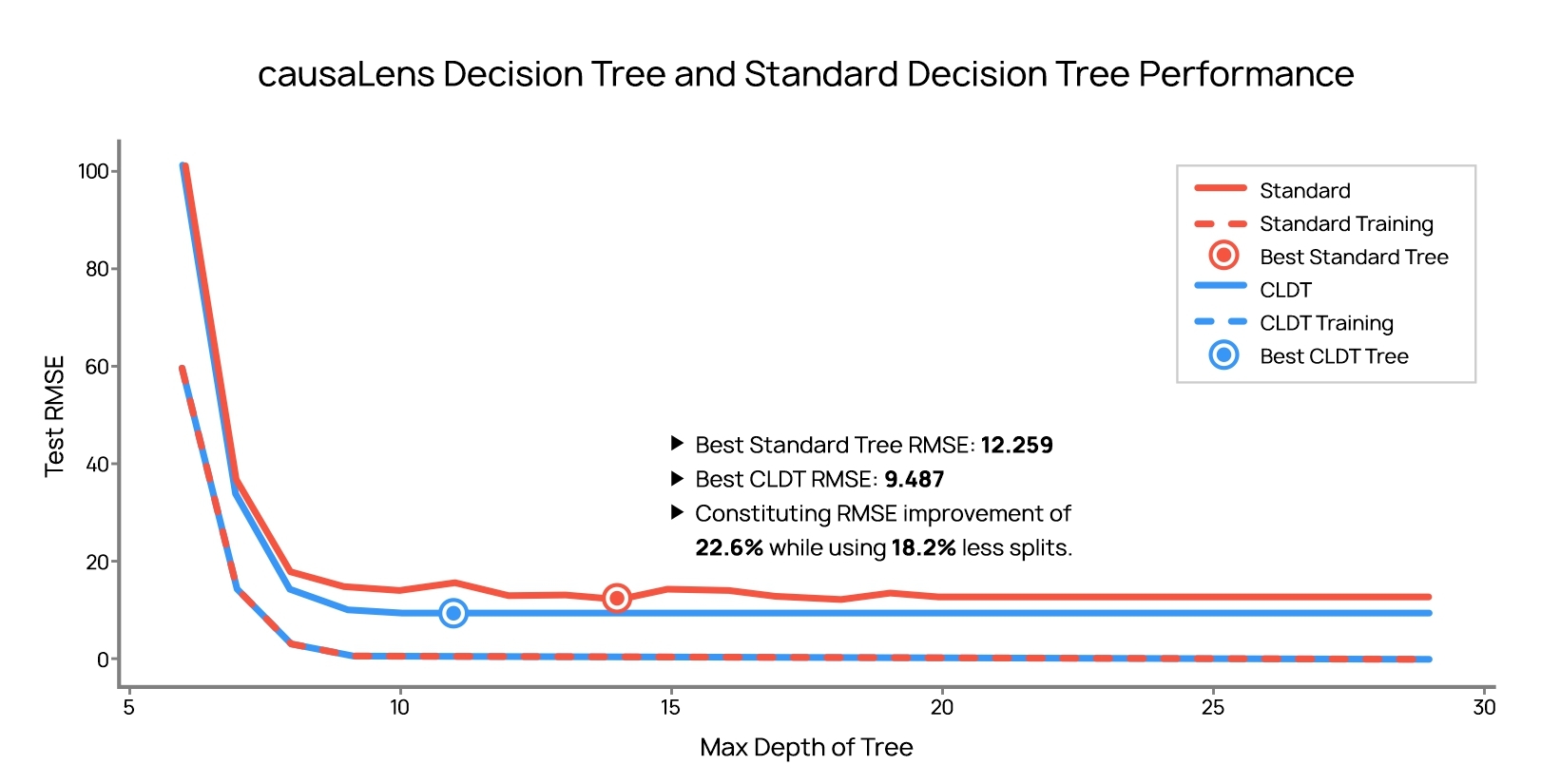

Use causality to fight overfitting and boost performance.

Traditional tree-based models are powerful but prone to overfitting, especially when using boosting or bagging techniques.

causaLens decision trees (CLDTs) use causal knowledge to constrain the structure of the tree. This helps prevent overfitting by removing any reliance on spurious correlations, resulting in a simpler, more interpretable model without compromising on performance.
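This page does not say how CLDTs encode causal knowledge internally. As a minimal sketch of the general idea only, assume the causal graph has been reduced to a hypothetical `allowed_features` list of direct causes of the target; a regression tree can then simply refuse to split on anything else. The feature names `price` and `noise` and the data are illustrative, not part of any causaLens API.

```python
from statistics import mean


def sse(values):
    """Sum of squared errors around the mean; 0 for an empty list."""
    if not values:
        return 0.0
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(rows, y, allowed_features):
    """Return (score, feature, threshold) minimizing SSE, or None.

    Only features in `allowed_features` (assumed here: direct causes of the
    target taken from a causal graph) are ever considered as split candidates.
    """
    best = None
    for f in allowed_features:
        for t in sorted({r[f] for r in rows}):
            left = [yi for r, yi in zip(rows, y) if r[f] <= t]
            right = [yi for r, yi in zip(rows, y) if r[f] > t]
            if not left or not right:
                continue  # a split must send data both ways
            score = sse(left) + sse(right)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, y, allowed_features, max_depth=2):
    """Grow a regression tree that can only split on allowed features."""
    split = best_split(rows, y, allowed_features) if max_depth > 0 else None
    if split is None:
        return {"leaf": mean(y)}
    _, f, t = split
    left = [i for i, r in enumerate(rows) if r[f] <= t]
    right = [i for i, r in enumerate(rows) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([rows[i] for i in left], [y[i] for i in left], allowed_features, max_depth - 1),
        "right": build_tree([rows[i] for i in right], [y[i] for i in right], allowed_features, max_depth - 1),
    }


def predict(tree, row):
    """Walk from the root to a leaf and return that leaf's mean."""
    while "leaf" not in tree:
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["leaf"]


# Hypothetical data: `price` causes demand; `noise` merely happens to
# line up with price in this small sample (a spurious correlation).
rows = [{"price": p, "noise": n} for p, n in
        [(1, 0), (1, 0), (2, 0), (2, 0), (3, 1), (3, 1), (4, 1), (4, 1)]]
demand = [10, 9, 8, 8, 4, 5, 3, 2]

tree = build_tree(rows, demand, allowed_features=["price"])
print(tree["feature"], tree["threshold"])       # price 2
print(predict(tree, {"price": 1, "noise": 1}))  # 9.5
```

Because `noise` is never a split candidate, a new row where `noise` no longer tracks `price` is still predicted from `price` alone. Restricting candidates is only the simplest possible form of a structural constraint; a real causal regularizer may be considerably richer.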

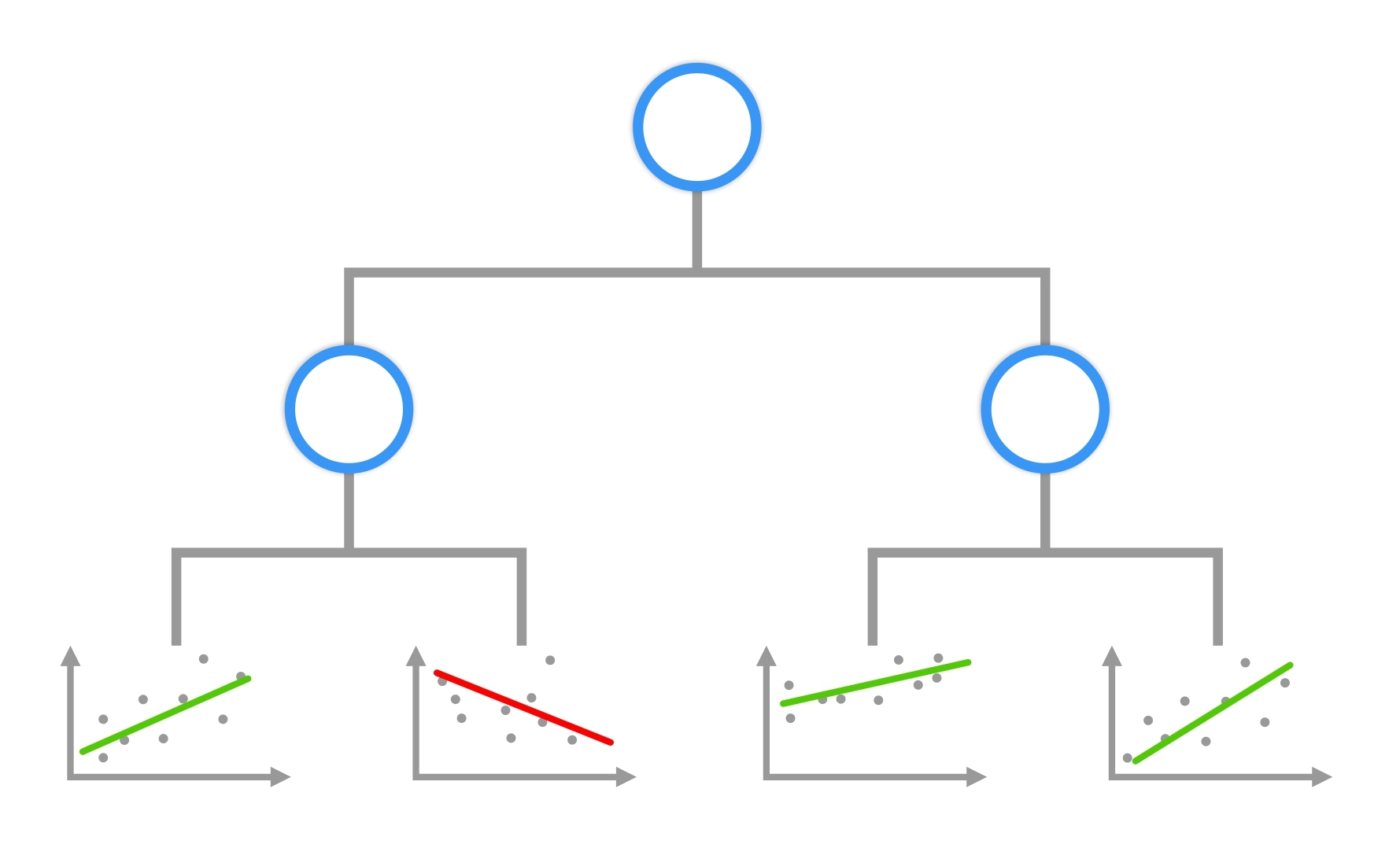

Leverage linear models to maximize benefits.

causaLens decision trees support fitting linear models to the leaf nodes of your model.

These linear models can then be used to calculate individual treatment effects, giving you greater flexibility in solving your business challenges.
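The exact form of CLDT leaf models is not described here. As a hedged sketch of how a linear leaf can yield an individual treatment effect, assume an already-grown tree with a single hypothetical split on `age`, a treatment `discount`, and an outcome `spend`. Each leaf gets its own least-squares line of `spend` on `discount`, and an individual's effect is the slope in the leaf they fall into. Reading that slope causally assumes the treatment is unconfounded within each leaf; that assumption is ours, not the page's.

```python
from collections import defaultdict


def ols_1d(x, y):
    """Closed-form least squares for y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def leaf_of(unit):
    """Hypothetical, already-grown tree with one split on age."""
    return "age<=30" if unit["age"] <= 30 else "age>30"


def fit_leaf_models(units):
    """Fit one linear model of spend on discount inside each leaf."""
    data = defaultdict(lambda: ([], []))
    for u in units:
        xs, ys = data[leaf_of(u)]
        xs.append(u["discount"])
        ys.append(u["spend"])
    return {leaf: ols_1d(xs, ys) for leaf, (xs, ys) in data.items()}


def individual_effect(models, unit):
    """Estimated effect of one extra unit of discount for this individual:
    the slope of the linear model in the leaf the individual falls into."""
    _, slope = models[leaf_of(unit)]
    return slope


# Illustrative data: younger customers respond more strongly to discounts.
units = [
    {"age": 25, "discount": 0, "spend": 20},
    {"age": 22, "discount": 5, "spend": 35},
    {"age": 28, "discount": 10, "spend": 50},
    {"age": 45, "discount": 0, "spend": 50},
    {"age": 52, "discount": 5, "spend": 55},
    {"age": 60, "discount": 10, "spend": 60},
]
models = fit_leaf_models(units)
print(individual_effect(models, {"age": 24}))  # 3.0
print(individual_effect(models, {"age": 50}))  # 1.0
```

A constant-valued leaf could only report an average outcome. The linear leaf adds a per-segment slope, which is what turns the tree into an estimator of heterogeneous effects.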

Deliver with confidence.

Don’t compromise on results when using causaLens decision trees.

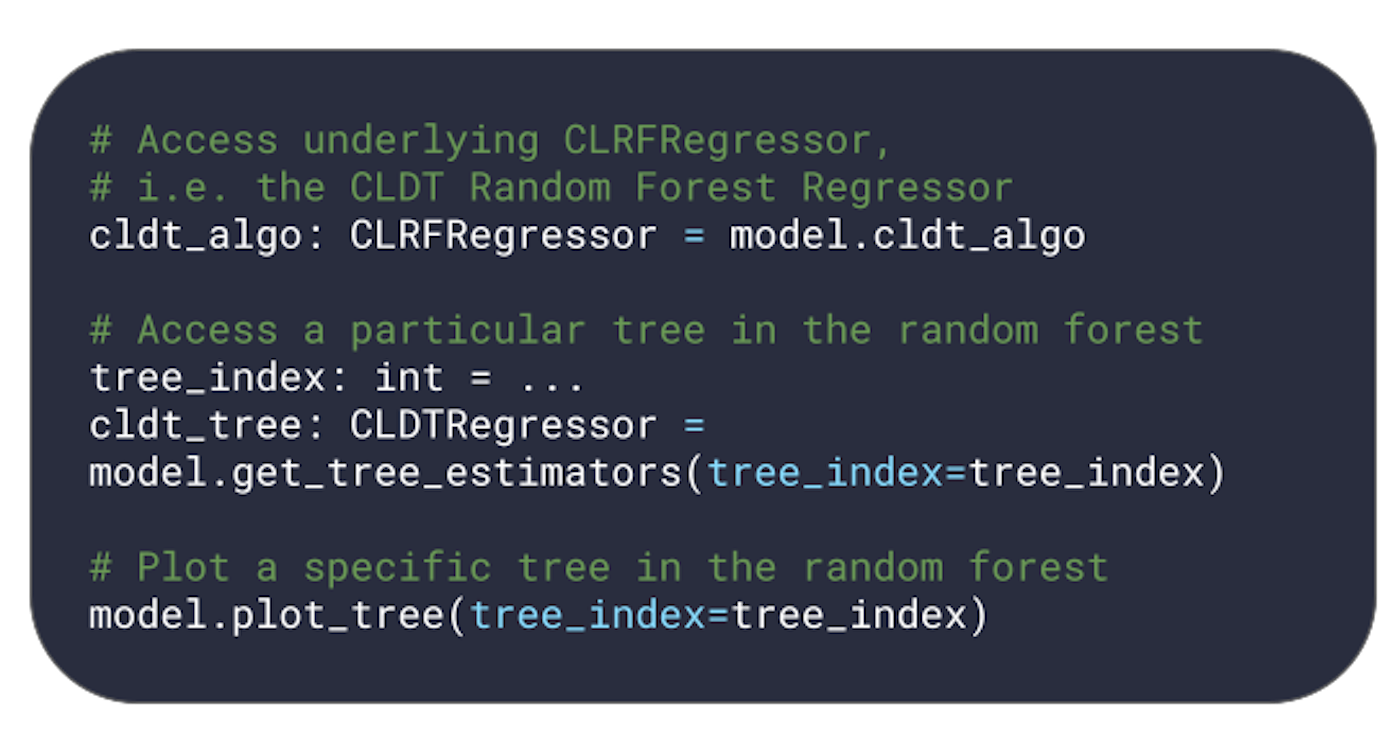

CLDTs natively support bagging and boosting and can be used to train a range of tree-based models for both classification and regression tasks:

- Decision Trees

- Random Forests

- Gradient Boosting Machines

- Natural Gradient Boosting Machines
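How CLDTs implement their ensembles is not documented here. As a hedged sketch of why a causal constraint composes with boosting, consider plain gradient boosting for squared error. Each round fits a weak learner to the current residuals, so if every weak learner is a stump restricted to a hypothetical `allowed_features` list, the whole ensemble inherits the restriction. This is ordinary gradient boosting, not natural gradient boosting, and the data are illustrative.

```python
from statistics import mean


def fit_stump(rows, residuals, allowed_features):
    """Least-squares depth-1 tree on the residuals, restricted to allowed features."""
    best = None
    for f in allowed_features:
        for t in sorted({r[f] for r in rows}):
            left = [e for r, e in zip(rows, residuals) if r[f] <= t]
            right = [e for r, e in zip(rows, residuals) if r[f] > t]
            if not left or not right:
                continue
            lm, rm = mean(left), mean(right)
            score = sum((e - lm) ** 2 for e in left) + sum((e - rm) ** 2 for e in right)
            if best is None or score < best[0]:
                best = (score, f, t, lm, rm)
    if best is None:  # no valid split: fall back to a constant learner
        m = mean(residuals)
        return lambda row: m
    _, f, t, lm, rm = best
    return lambda row: lm if row[f] <= t else rm


def gradient_boost(rows, y, allowed_features, n_rounds=100, learning_rate=0.1):
    """Gradient boosting for squared error: each stump is fit to the current
    residuals (the negative gradient), so every stump obeys the same constraint."""
    base = mean(y)
    stumps = []
    preds = [base] * len(y)
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(rows, residuals, allowed_features)
        stumps.append(stump)
        preds = [p + learning_rate * stump(r) for p, r in zip(preds, rows)]

    def predict(row):
        return base + learning_rate * sum(s(row) for s in stumps)

    return predict


rows = [{"price": p, "noise": n} for p, n in
        [(1, 0), (1, 0), (2, 0), (2, 0), (3, 1), (3, 1), (4, 1), (4, 1)]]
demand = [10, 9, 8, 8, 4, 5, 3, 2]

model = gradient_boost(rows, demand, allowed_features=["price"])
# The spurious `noise` column can never change a prediction:
print(model({"price": 1, "noise": 0}) == model({"price": 1, "noise": 1}))  # True
```

The same reasoning would carry over to bagging. If every tree in the bag is grown on a bootstrap sample with the same `allowed_features`, the averaged prediction cannot depend on excluded features either.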